In the rapidly evolving field of AI, Large Language Models (LLM)’s like LLaMa and the open source inference engine, LLaMa.cpp, are quickly becoming instrumental in bridging the gap between cutting-edge AI models and their practical deployment on common architectures. We’ll describe LLaMa’s significance, uncover the benefits of LLaMa.cpp, and examine their relevance to z/OS, as well as explore the challenges of adapting the LLaMa model and the LLaMa.cpp inference engine to the z/OS platform.

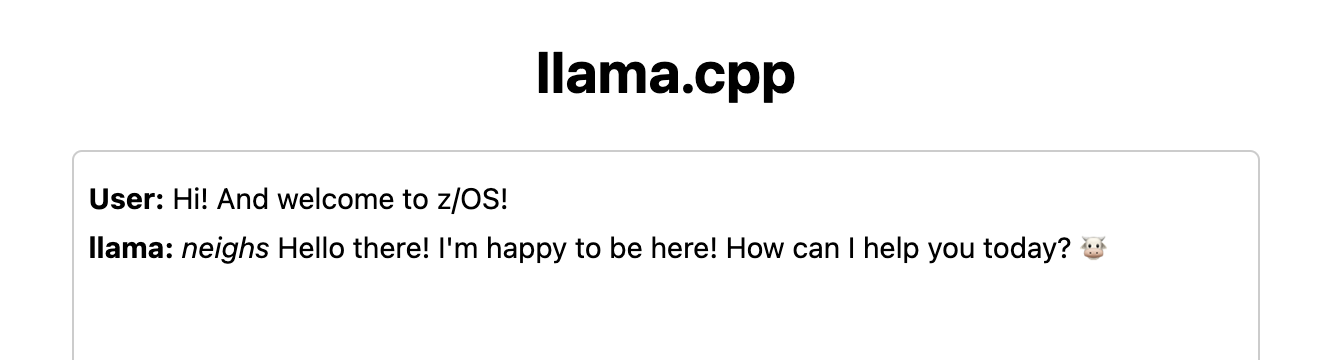

LLaMa.cpp's server program running on z/OS

LLaMa.cpp's server program running on z/OS

The LLaMa Model

LLaMa, or Large Language Model Meta AI, was introduced in February 2023 by Meta. The LLaMa set of large language models (LLMs) quickly gained attention for its ability to generate human-like text and comprehend vast language data, with very similar accuracy to ChatGPT.

Unlike OpenAI’s ChatGPT/GPT-4 models, which have remained closed, Meta opened up its LLaMa v1 model to the community under a noncommercial license.

Then, in July 2023, Meta unveiled LLaMa 2, introducing models with 7 billion, 13 billion, 33 and 70 billion parameters, and this time made its LLM models available for commercial use as well. Additionally, Llama 2 models were trained with 40% more data, leading to much better results than before.

Meta also released models that were fine-tuned for chat, including Llama-2-7b-chat and Llama-2-13b-chat, which were fine-tuned using reinforcement learning from human feedback.

LLaMa Models and Performance

The LLaMa models achieved great scores across the board, as evidenced by Hugging Face’s LLM leaderboard. The Hugging Face leaderboard measures and tracks the top open LLM models.

The model with the best trade-off, though, is LLaMa’s 13-billion parameter model, which outperformed much larger models like GPT-3 (with 175 billion parameters) in various language tasks, but with the benefit of being able to run it on lower cost systems!

Can we run it on a CPU Based Architecture?

Until now, it has been widely assumed that a high-powered GPU is necessary for performing efficient transformations and inferencing. However, LLaMa.cpp has changed the game by enabling CPU-based architectures to run LLM models at a reasonable speed!

Introducing LLaMa.cpp - A Game Changer in AI

LLaMa.cpp, an open source LLaMa inference engine, is a new groundbreaking C++ inference engine designed to run LLaMa models efficiently.

It achieves this through its use of quantization. Quantization is used to reduce the precision of the model weights. This saves memory and makes calculations much faster. For LLaMa models, such as Llama 2-7B, quantization reduces the memory requirements from 13Gb to 4gb and for the LLaMa-13B model, from 24 GB to 7.8gb.

If you’re interested in learning more about the quantization method used in LLaMa.cpp, the article How is LLaMa.cpp possible? does a great job explaining it.

Additionally, LLaMa.cpp is written in C++ and provides C headers to its interfaces. This means that you can bind it to your favourite languages, such as Node.js, Go, and Python!

Performance Across Devices

LLaMa Benchmark reports reveal significant improvements over the original models, in some cases offering up to 3-4 times speedup from quanitization.

Their github page also reports the following statistics across the various quantization methods:

| Model | Measure | F16 | Q4_0 | Q4_1 | Q5_0 | Q5_1 | Q8_0 |

|---|---|---|---|---|---|---|---|

| 7B | perplexity | 5.9066 | 6.1565 | 6.0912 | 5.9862 | 5.9481 | 5.9070 |

| 7B | file size | 13.0G | 3.5G | 3.9G | 4.3G | 4.7G | 6.7G |

| 7B | ms/tok @ 4th | 127 | 55 | 54 | 76 | 83 | 72 |

| 7B | ms/tok @ 8th | 122 | 43 | 45 | 52 | 56 | 67 |

| 7B | bits/weight | 16.0 | 4.5 | 5.0 | 5.5 | 6.0 | 8.5 |

| 13B | perplexity | 5.2543 | 5.3860 | 5.3608 | 5.2856 | 5.2706 | 5.2548 |

| 13B | file size | 25.0G | 6.8G | 7.6G | 8.3G | 9.1G | 13G |

| 13B | ms/tok @ 4th | - | 103 | 105 | 148 | 160 | 131 |

| 13B | ms/tok @ 8th | - | 73 | 82 | 98 | 105 | 128 |

| 13B | bits/weight | 16.0 | 4.5 | 5.0 | 5.5 | 6.0 | 8.5 |

As you can see, going from F16 (16-bit float) to Q4_0 quantization gets you a 1.5 times speedup. Also, perplexity which is a common metric for measuring how effective an LLM is, does not suffer greatly from quantization! Awesome!

The power of LLaMa.cpp has already been evidenced in the field with these examples:

- On a Pixel 5, the 7B model manages 1 token per second

- An M2 MacBook Pro achieves around 16 tokens per second with the same model

LLaMa Meets z/OS

z/OS, the operating system for IBM Z mainframes, serves as the backbone for critical operations across many industries. Data is everything, and z/OS systems process a lot of data. Merging AI with z/OS opens new doors for business and financial intelligence. IBM is already doing a fantastic job at this by introducing the Python AI Toolkit for IBM z/OS as well as WatsonX, which also plans to make the LLaMa 2 models available on its platform!

LLaMa.cpp, is yet another option and it aligns well with z/OS’s CPU-based architecture and large memory cache.

One other reason for running LLaMa.cpp locally on z/OS is security. Data on z/OS machines is typically sensitive, and as such, many clients choose to air-gap their systems. (Air-gapping isolates a computer or network from external connections). For sensitive industries like finance and healthcare, local AI models are critical for security by limiting exposure to external threats.

I’m convinced! let’s port LLaMa.cpp to z/OS

Let’s now discuss how we can get LLaMa.cpp to work on z/OS.

Porting LLaMa.cpp to z/OS isn’t without its difficulties. Challenges include:

-

C Runtime Adaptation: Porting C/C++ code involves dealing with a different C runtime on z/OS, known as the Language Environment (LE). Fortunately, in the case of LLaMa.cpp, we were able to leverage the zoslib library, which bridges the gap between the Linux C runtime and the z/OS C runtime. ✅

-

Missing Tools: LLaMa.cpp has dependencies on common build tools such as GNU Make and CMake. These tools are not natively available on z/OS. However, we were able to leverage the work from the zopen community and use these tools to build LLaMa.cpp with no issues. ✅

-

Endianness Issues: 99% of the open LLM models, including LLaMa’s models, are in little endian format. IBM Z is a big endian platform. Therefore, we need a mechanism to convert models from little endian to big endian. Either the models need to be converted as a pre-step prior to inferencing, or the inferencing engine needs to be able to auto convert little endian models to big endian. Let’s examine both approaches in more detail.

For a look at the patches applied to get LLaMa.cpp to work, visit llamacppport. (Note, this was hacked in a weekend. :) I list the future consideration at the end of this blog).

Approach 1 - Converting models from little endian to big endian

LLaMa.cpp requires models to be in its own unique GGJT format (soon to be updated to GGUF), and it provides a handy script, convert.py that can convert any LLaMa model to this format. Fortunately, this provided a way for us to additionally write out the content in big endian format after conversion.

For example, the conversion script, which was written in Python, writes out 32-bit integer values as follows:

1

self.fout.write(struct.pack("i", len(text)))

Adding an “!” swapped the bytes so that it would be written out in big endian order.

1

self.fout.write(struct.pack("!i", len(text)))

I ran the conversion script on Linux x86, but if we were to run it on IBM Z, we would swap the bytes at read-time, as opposed to write-time.

However, I wasn’t very happy with this approach because it meant that I couldn’t directly download a model from huggin face and have it just work.

Approach 2 - Modifing the LLaMa.cpp code to dynamically convert models to big endian

When the LLaMa.cpp inference engine starts up, it reads the model into memory. It provides routines to read float and integer data. Therefore, after reading integer or float data, we could swap the bytes to match the native endian format, big endian. This required some knowledge of the tensor weight format, but with a little bit of work, we wre able to get it to work!

For a closer look at how this was achieved, view the patches here.

LLaMa running on z/OS!

After successfully applying these changes, we are now able to run LLaMa.cpp on z/OS!

If you’re interested in installing LLaMa.cpp, you can download it using zopen as follows:

1

zopen install llamacpp

or download the pax.Z release.

In my example below, I used the model “Llama-2-7b-chat”. I downloaded the little endian model that was already converted and quantized from https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML.

I used zopen’s curl to download it to z/OS as follows:

curl -L -O https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML/resolve/main/llama-2-7b-chat.ggmlv3.q4_0.bin

Note, to proceed to the next steps, you will need at least 4GB of above the bar memory to proceed. Adjust your MEMLIMIT if necessary.

Now we’re ready to try it out!

To demonstrate a typical financial scenario, I asked it to calculate the total expenses as follows:

I have 1000 employees. Each one makes $80k/year. I also pay $100k/year for my property. What are my yearly expenses?

I issued it as follows:

$PATH_TO_LLAMACPP/main -m llama-2-7b-chat.ggmlv3.q4_0.bin -n 128 "I have 1000 employees. Each one makes \$80k/year. I also pay \$100k/year for my property. What are my yearly expenses?"

The result was:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

system_info: n_threads = 12 / 24 | AVX = 0 | AVX2 = 0 | AVX512 = 0 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 0 | NEON = 0 | ARM_FMA = 0 | F16C = 0 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 0 | SSE3 = 0 | VSX = 0 |

sampling: repeat_last_n = 64, repeat_penalty = 1.100000, presence_penalty = 0.000000, frequency_penalty = 0.000000, top_k = 40, tfs_z = 1.000000, top_p = 0.950000, typical_p = 1.000000, temp = 0.800000, mirostat = 0, mirostat_lr = 0.100000, mirostat_ent = 5.000000

generate: n_ctx = 512, n_batch = 512, n_predict = 128, n_keep = 0

I have 1000 employees. Each one makes $80k/year. I also pay $100k/year for my property. What are my year expenses?

$100,000 (property) + ($80,000 x 1000 employees) = $8,100,000

So your yearly expenses are $8,100,000. [end of text]

llama_print_timings: load time = 22215.58 ms

llama_print_timings: sample time = 98.91 ms / 60 runs ( 1.65 ms per token, 606.60 tokens per second)

llama_print_timings: prompt eval time = 24075.94 ms / 41 tokens ( 587.22 ms per token, 1.70 tokens per second)

llama_print_timings: eval time = 34295.69 ms / 59 runs ( 581.28 ms per token, 1.72 tokens per second)

llama_print_timings: total time = 58579.91 ms

Terrific! It worked!

Now let’s make it an interactive session! And let’s see if it can write code for us!

Let’s ask it to write a C program that prints out Hello World.

./bin/main -m ../llama-2-7b-chat.ggmlv3.q4_0.bin -n 125 -i -p "[INST] <<SYS>> You are a helpful, respectful and honest assistant. <</SYS>> Write a C program that prints Hello World. [/INST]"

The -i option make it interactive.

This is the output that we got:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

INST] <<SYS>> You are a helpful, respectful and honest assistant. <</SYS>> Write a Java program that prints Hello World. [/INST] Of course! Here is a simple Java program that prints "Hello World":

public class HelloWorld {

public static void main(String[] args) {

System.out.println("Hello World!");

}

}

To compile and run this program, you will need a Java compiler (such as `javac`) and a Java runtime environment (such as `java`). You can also use an Integrated Development Environment (IDE) such as Eclipse or NetBeans to write, compile, and run your program.

Here is how you could compile and runCan you continue

To compile the program, you can use the following command in the terminal or command prompt:

javac HelloWorld.java

This will create a `HelloWorld.class` file in the current directory, which you can then run using the following command:

java HelloWorld

This will print "Hello World!" to the console.

I hope this helps! Let me know if you have any questions or need further assistance.

It worked! On a heavily used LPAR with 24 CPUs, I was able to get a token rate of 500ms/token, which was not too bad.

Now, let’s ask it to turn our unstructured data (text) into structured data (JSON).

[INST] <<SYS>> You are a helpful, respectful and honest assistant. <</SYS>> Can you create a json file representing the following information: IBM revenue for the twelve months ending June 30, 2023 was 5.25B, a 1.42% increase year-over-year. IBM annual revenue for 2022 was 5.3B, a 5.54% increase from 2021. IBM annual revenue for 2021 was 7.35B, a 3.93% increase from 2020. IBM annual revenue for 2020 was 5.179B, a 4.39% decline from 2019.

We get the following output:

1

2

3

4

5

6

7

8

9

10

11

12

{

"Revenue": {

"Annual Revenue": [

{

"Year": 2023,

"Revenue": 5.25B

},

{

"Year": 2022,

"Revenue": 5.3B

},

...

Impressive!

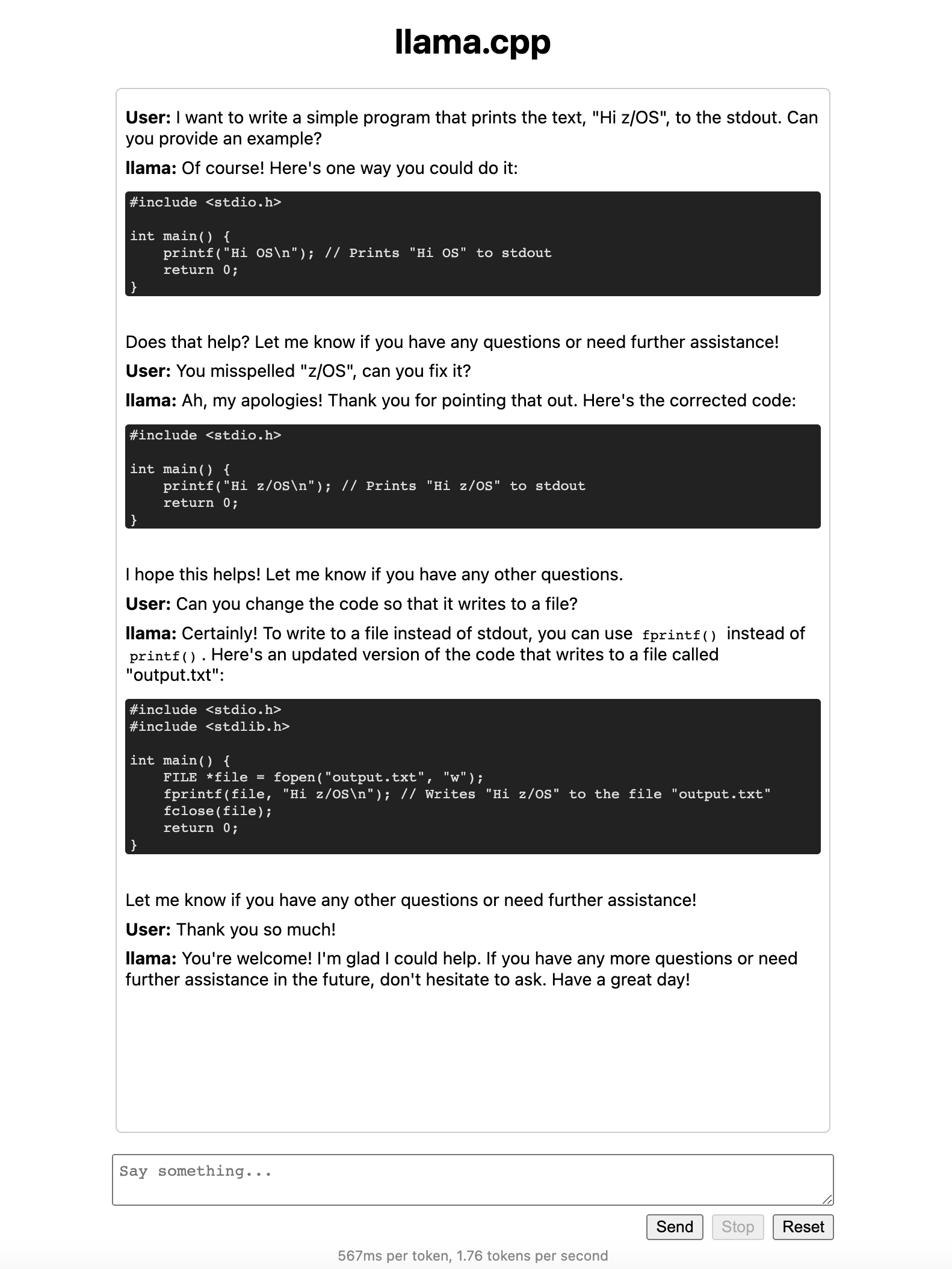

LLaMa.cpp also features an example chatbot that can be run via it’s server executable.

Run it as follows:

1

server -m llama-2-7b-chat.ggmlv3.q4_0.bin

Here’s an example dialogue with z/OS running the inference engine!

LLaMa.cpp's server program running on z/OS

LLaMa.cpp's server program running on z/OS

Future Work

- In order to perform the dynamic convertion from little endian to big endian, we had to disable the mmap. Enabling mmap support would allow for the model to load much faster. Revisit Approach 1 so that we can optimize start-up time.

- Performance improvement considerations: LLaMa.cpp supports the BLAS library for math speedup. It also supports SIMD (AVX2), but this needs to be enabled on s390x.

- Add an option to convert models to big endian, rather than always dynamically converting to big endian.

- Upstream the effort to the LLaMa.cpp community

Special Thanks

Thank you to Haritha D and Mike Fulton for contributing to this effort!

-

Previous

WeAreDevelopers World Congress - Where Creativity Meets Technology -

Next

Exploring ZOSLIB: An open source z/OS library for porting